I have to start with how I obtained a copy of NDepend Professional, because it was actually a little funny. While cleaning out my email, I came across a message that looked like it could be spam, but I don't usually see spam offering licenses for software development tools. I took a chance and opened it. There were some misspelled words and some odd grammar, but overall it seemed to come from Patrick Smacchia through @NDepend support. I replied, and it turned out to be valid. Sometimes it does pay to follow up on "spam". Purchasing a license for myself was already on my list for this year, so although I received the license for free (hence the disclosure at the end of the article), it was already on my radar as a worthwhile product.

As a regular follower of Ayende Rahien who enjoys his reviews of projects, I decided that RavenDB would be the first project I'd run through NDepend. Being the bleeding-edge kind of developer I like to be, I pulled down the repository with changes through 2/8/2013 on the master branch.

While that was being pulled down, I downloaded NDepend from the website. Since the Professional edition requires a license file saved alongside the executable, I'm not sure I agree with also needing to enter a License ID just to download the program. Anyway, I proceeded with the download and found it was a zipped folder. The install instructions amount to an XCopy deployment. That's useful, I suppose, but the instructions say not to put it in a folder under %ProgramFiles%, which suggests it assumes it always has rights to modify files in its own folder structure. Normally I still like to deploy things to Program Files because it makes cleanup easier, but in this case I'll put it in my C:\Utils folder. As I mentioned, installing the license meant saving the emailed XML license file alongside the unzipped executables. I realize this tool is meant for developers, but a rough install process doesn't usually bode well for the experience of the program itself. Admittedly this process isn't so bad, but for something labeled version 4, I'd expect more thought to have gone into an installer or into a way to install the license from within the running application. On the flip side, keeping it as an XCopy deployment makes it simple to include in a code repository and generate reports as part of a build.

With everything downloaded and RavenDB built in regular Release mode (not one of the version-specific releases, just plain "Release"), it's time to open Visual NDepend. A bouncing red arrow indicates that I can open a Visual Studio project or solution. Okay, that makes it nice and easy.

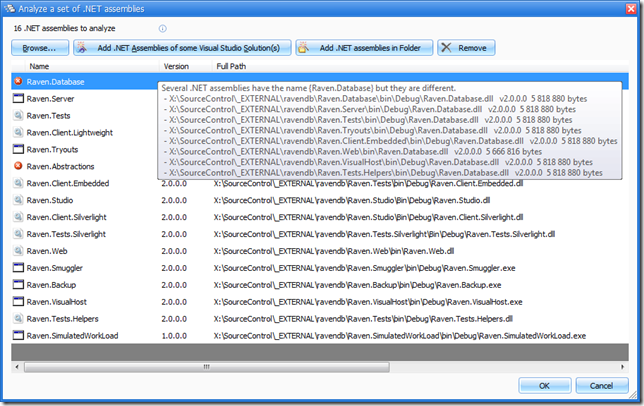

I click the link and point it at the RavenDB solution file. That was pretty simple, except that I'm presented with a few errors about multiple, different versions of the same files.

Considering there are test files and Silverlight versions, I'm not too surprised there are multiple, different files for those assemblies. This approach might not be the best start, so maybe I'll begin with just the RavenDB server. After removing all the assemblies from the list, I add everything in the Bin folder of the Raven.Server project. That's more than just the Raven assemblies, but I'm trying to understand what the dependencies on those other libraries are, right? After I click OK and let it churn for a little while, I'm presented with a "What do you want to do now?" style dialog along with an HTML report.

For now I'll open the Interactive UI Graph. There's clearly a lot of information here, but it feels a little overwhelming at first glance.

I'm going to save digging into most of the information for next time, but for now, here is what I'm seeing in the main view:

- Based on the number of "N/A because one or several PDB file(s) N/A" remarks for metrics like Lines Of Code and Lines Of Comment, I probably should have pulled in only the assemblies that are actually built by the project.

- Based on the "N/A because no coverage data specified" remarks, I'm guessing I could include the unit test assemblies to show code coverage. I'll definitely have to explore that further.

- There seem to be a lot of items that violate the queries/rules. It's not obvious why some of the rule groups have an orange-ish outline and others do not.

- As the mouse moves over lines and boxes in the graph, a context sensitive help box appears that gives a rough idea of what the item represents. The help box also has some links to dig deeper into understanding the relationships.

There's definitely a lot to this program, but I think I've covered a good first impression of it so far. Not too bad for not having read any instructions on how to use it.